In industrial automation, quality control, and robotics, precise distance measurement is a fundamental requirement. High-precision laser sensors make it possible to measure without contact and with very small error. One of the more demanding specifications is a tolerance of 0.01 units, typically millimeters or inches. The unit matters: 0.01 mm is 10 µm, while 0.01 inch is 0.254 mm, so a tolerance figure only means something once its unit is stated. This article covers the technology behind laser sensors capable of such precision, their working principles, key applications, and the factors that influence their 0.01 tolerance performance.

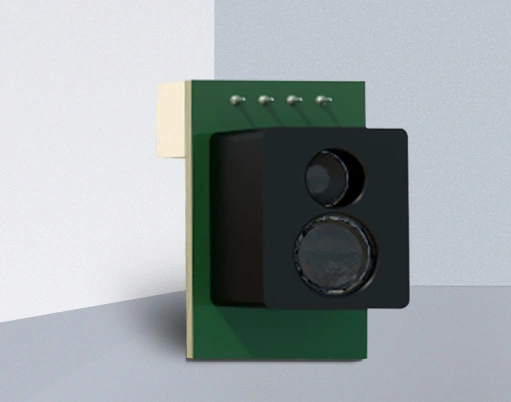

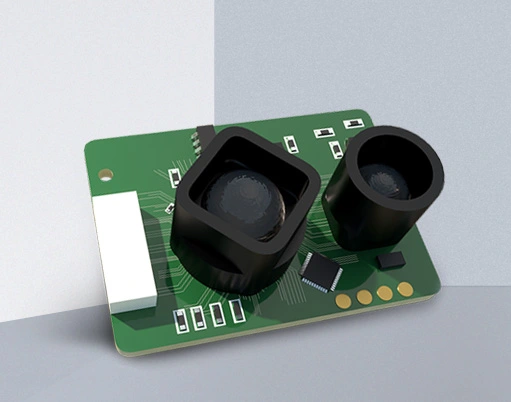

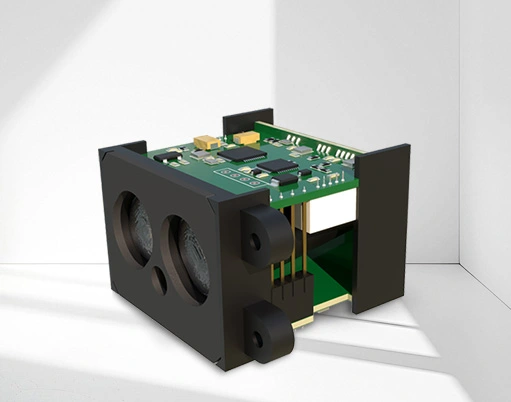

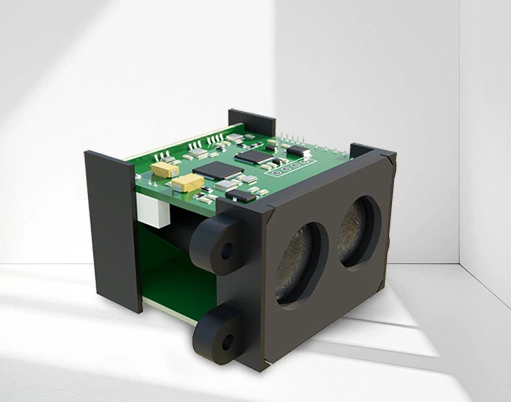

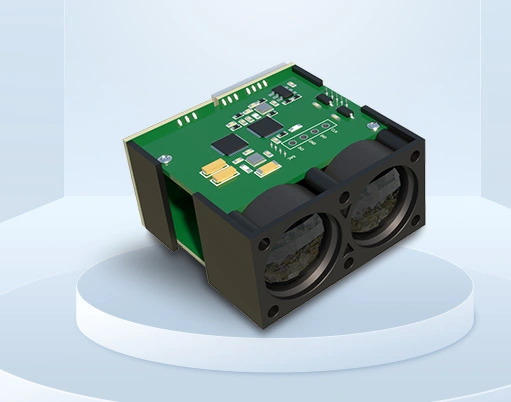

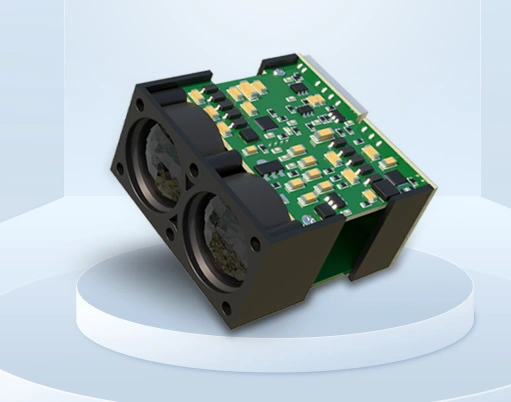

Laser distance sensors operate primarily on two principles: time-of-flight (ToF) and triangulation. For a 0.01 tolerance, triangulation is usually the preferred choice in short- to medium-range applications. A laser diode emits a focused beam onto the target surface, and the light returned from the spot is imaged through a receiving lens onto a detector such as a CMOS or CCD array. Because the receiver views the spot from an angle, any change in the target's distance shifts the spot's position on the detector. The sensor locates the spot, typically to a fraction of a pixel, and converts that position into a distance using its calibrated geometry. The core components (a stable laser source, a high-resolution optical detector, and signal processing electronics) work together to achieve and maintain the stated 0.01 tolerance. Here the tolerance is the maximum permissible error between the measured value and the true distance; holding it consistently in a critical process also requires good repeatability.
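The triangulation step can be sketched in a few lines. This is a minimal illustration, not any vendor's algorithm: it assumes a simplified geometry in which the laser beam runs parallel to the receiver's optical axis at a fixed baseline, so the spot offset on the detector is `x = f * b / z`. The function names and all numeric values (focal length, baseline, pixel pitch) are hypothetical, and real sensors use tilted optics and factory calibration tables instead of this closed form.

```python
from typing import Sequence


def spot_centroid(intensity: Sequence[float], threshold: float = 0.0) -> float:
    """Intensity-weighted centroid of the laser spot, in fractional pixels.

    Values at or below `threshold` are ignored to suppress background light.
    """
    total = 0.0
    weighted = 0.0
    for index, value in enumerate(intensity):
        weight = max(value - threshold, 0.0)
        total += weight
        weighted += index * weight
    if total == 0.0:
        raise ValueError("no signal above threshold")
    return weighted / total


def distance_from_spot(
    centroid_px: float,
    ref_pixel: float,
    pixel_pitch_mm: float,
    focal_length_mm: float,
    baseline_mm: float,
) -> float:
    """Distance z from the spot offset x, using z = f * b / x.

    `ref_pixel` is the (assumed known) pixel where the optical axis
    meets the detector.
    """
    offset_mm = (centroid_px - ref_pixel) * pixel_pitch_mm
    if offset_mm <= 0.0:
        raise ValueError("spot offset must be positive in this geometry")
    return focal_length_mm * baseline_mm / offset_mm


# Hypothetical example values: f = 20 mm, b = 30 mm, 5 µm pixels.
profile = [0.0, 0.0, 1.0, 3.0, 1.0, 0.0]  # toy intensity profile
print(spot_centroid(profile))
print(distance_from_spot(600.0, 0.0, 0.005, 20.0, 30.0))
```

With these assumed numbers, a 0.01 mm change in distance near 200 mm moves the spot by only about 0.15 µm, roughly 0.03 of a 5 µm pixel, which is why sub-pixel spot location matters for this tolerance class.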

The applications for laser sensors with 0.01 tolerance are wide-ranging and often critical. In semiconductor manufacturing, they are used for wafer alignment and thickness inspection, where even micron-level deviations can lead to circuit failure. In precision machining, these sensors monitor tool wear and verify part dimensions in real time, helping components like aerospace fittings or medical implants meet strict design specifications. Automated assembly lines rely on them for gap and flush measurement in automotive body panels to keep fit and finish consistent. Furthermore, in 3D scanning and metrology, they form the backbone of systems that capture detailed surface profiles for reverse engineering and quality assurance. The non-contact nature of laser measurement is crucial here, because it avoids deforming or damaging sensitive or delicate objects during inspection.

Achieving and consistently delivering a 0.01 tolerance is not a simple feat and depends on several interrelated factors. Environmental conditions are paramount. Temperature fluctuations cause thermal expansion in the sensor housing and optical components, which can make the measurement drift. High-end sensors incorporate internal temperature compensation to mitigate this. Ambient light, especially from other lasers or strong industrial lighting, can interfere with the sensor's receiver. Models designed for high precision often use modulated laser light and narrow-band optical filters to reject this noise. The target surface properties also play a significant role. A diffuse, matte white surface is close to the ideal target. Shiny or transparent surfaces, such as polished metal or glass, reflect much of the beam specularly, so too little light may reach the receiver, or a stray reflection may reach it instead, leading to measurement errors or dropouts. Sensors designed or mounted for specular (regular) reflection, or sensors with teach functions that adapt to the surface, can help with such targets, while diffuse-reflection models suit matte surfaces. Finally, mechanical stability is essential. Vibrations from machinery can affect both the sensor's mounting and the target, introducing noise into the measurement. Mounting the sensor on a stable structure and using a high sampling rate, so that readings can be averaged or filtered, helps suppress vibrational effects.
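The filtering mentioned above can be illustrated with two simple software filters. This is a sketch under assumptions, not a description of any sensor's built-in processing: the class and function names are hypothetical, readings are assumed to arrive as floats in millimeters, and the window sizes are arbitrary. A moving average reduces random noise (for uncorrelated noise, averaging N samples lowers the standard deviation by roughly a factor of √N), while a rolling median rejects isolated spikes such as dropouts on shiny targets.

```python
from collections import deque
from statistics import median
from typing import List, Sequence


class MovingAverage:
    """Mean of the most recent `window` readings."""

    def __init__(self, window: int) -> None:
        if window < 1:
            raise ValueError("window must be at least 1")
        self._buffer = deque(maxlen=window)  # old readings drop out automatically

    def update(self, reading_mm: float) -> float:
        """Add one reading and return the current windowed mean."""
        self._buffer.append(reading_mm)
        return sum(self._buffer) / len(self._buffer)


def rolling_median(readings_mm: Sequence[float], window: int = 3) -> List[float]:
    """Median over a trailing window; robust against single outliers."""
    if window < 1:
        raise ValueError("window must be at least 1")
    result = []
    for i in range(len(readings_mm)):
        start = max(0, i - window + 1)
        result.append(median(readings_mm[start : i + 1]))
    return result


# Hypothetical readings: small vibration noise, then one spike.
smoother = MovingAverage(window=4)
for value in [10.00, 10.02, 9.98, 10.00, 10.04]:
    print(smoother.update(value))
print(rolling_median([10.0, 10.0, 99.0, 10.0, 10.0], window=3))
```

The trade-off is response time: a longer window smooths more but reacts more slowly to a real change in distance, which is one reason a high raw sampling rate is useful.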

When selecting a laser sensor for applications demanding 0.01 tolerance, several specifications beyond the basic range and accuracy must be evaluated. Resolution, the smallest detectable change in distance, should be significantly finer than the tolerance itself; a common rule of thumb is about one tenth of the tolerance. Repeatability, the sensor's ability to produce the same reading for the same distance under unchanged conditions, is often more critical than absolute accuracy for process control. The response time or sampling rate determines how quickly the sensor can update its measurement, which is vital for high-speed production lines. Output interfaces (e.g., analog voltage/current, digital RS-422, EtherNet/IP, PROFINET) must integrate cleanly with the existing control system. Additionally, choosing sensors rated IP67 or higher helps ensure reliability in harsh industrial environments with dust, moisture, or coolant exposure.
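A few of these checks are simple arithmetic and can be sketched as follows. The function names are hypothetical, the 10:1 resolution ratio is a rule-of-thumb assumption, not a requirement from any standard cited here, and vendors define repeatability in different ways (peak-to-peak, a multiple of the standard deviation, and so on), so the sketch reports both common summaries without claiming either as the datasheet definition.

```python
from statistics import stdev
from typing import Dict, Sequence


def repeatability_summary(readings_mm: Sequence[float]) -> Dict[str, float]:
    """Spread of repeated readings of one fixed target, in mm."""
    if len(readings_mm) < 2:
        raise ValueError("need at least two readings")
    return {
        "peak_to_peak_mm": max(readings_mm) - min(readings_mm),
        "std_mm": stdev(readings_mm),  # sample standard deviation
    }


def sample_spacing_mm(line_speed_mm_s: float, sampling_rate_hz: float) -> float:
    """Distance the part travels between two consecutive measurements."""
    if sampling_rate_hz <= 0.0:
        raise ValueError("sampling rate must be positive")
    return line_speed_mm_s / sampling_rate_hz


def resolution_is_adequate(
    resolution_mm: float, tolerance_mm: float, ratio: float = 10.0
) -> bool:
    """True if resolution is at least `ratio` times finer than the tolerance."""
    return resolution_mm * ratio <= tolerance_mm


# Hypothetical values for illustration only.
readings = [10.001, 10.003, 9.999, 10.002, 10.000]
print(repeatability_summary(readings))
print(sample_spacing_mm(line_speed_mm_s=500.0, sampling_rate_hz=2000.0))
print(resolution_is_adequate(resolution_mm=0.0005, tolerance_mm=0.01))
```

In this example a part moving at 500 mm/s past a sensor sampling at 2 kHz is measured every 0.25 mm along its length, which shows how sampling rate and line speed together set the spatial detail of an inspection.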

In conclusion, laser sensors offering distance measurement with a 0.01 tolerance are among the most precise practical options for non-contact metrology in industrial applications. For a 0.01 mm specification, this means reliable accuracy on the order of 10 µm, which improves manufacturing and inspection processes by enhancing quality, reducing waste, and enabling automation of complex tasks. Success hinges on understanding the underlying technology, carefully considering the application's specific environmental and target conditions, and selecting a sensor whose full set of specifications matches the requirement. As the technology advances, these sensors continue to extend what can be measured in production environments.