Laser rangefinder sensors have become indispensable tools across numerous industries, from construction and surveying to autonomous vehicles and military applications. Their accuracy is paramount: even a small ranging error can propagate into costly mistakes, affecting project outcomes, safety, and operational efficiency. Professionals who rely on precise distance data therefore need to understand which factors influence rangefinder accuracy and how it can be improved.

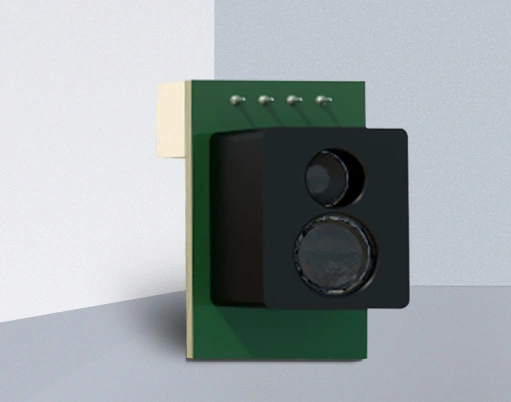

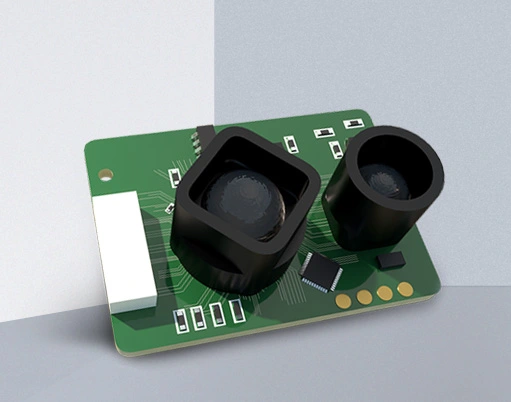

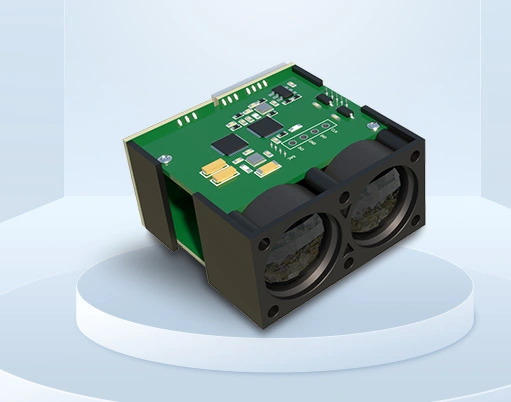

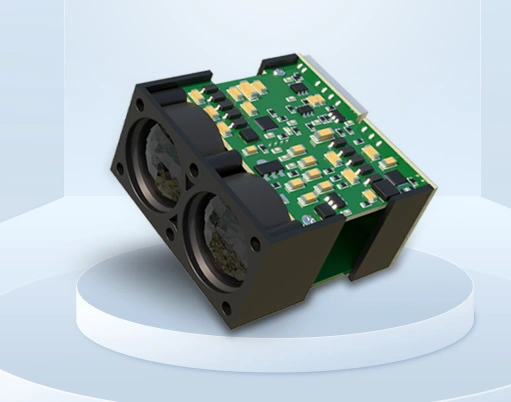

The core principle of a laser rangefinder is to emit a laser pulse towards a target and measure the time the pulse takes to reflect back to the sensor. Because the pulse covers the distance twice, out and back, this time-of-flight (ToF) measurement is converted into distance by multiplying it by the speed of light and halving the result. The accuracy of this process is influenced by several intrinsic and extrinsic factors.
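The conversion from round-trip time to distance can be sketched in a few lines of Python. This is a minimal illustration, not any particular sensor's firmware: the function name `tof_distance` is hypothetical, and the optional refractive index (about 1.0003 for air near sea level) is an assumed correction for light travelling slightly slower in air than in a vacuum.

```python
# Speed of light in vacuum in metres per second (exact by SI definition).
SPEED_OF_LIGHT = 299_792_458.0


def tof_distance(round_trip_s: float, refractive_index: float = 1.0) -> float:
    """Convert a measured round-trip time (seconds) into a one-way distance (metres).

    The pulse covers the distance twice, so the path length is halved.
    refractive_index is an assumed correction: light in air travels at c / n.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    if refractive_index <= 0:
        raise ValueError("refractive index must be positive")
    return SPEED_OF_LIGHT * round_trip_s / (2.0 * refractive_index)


if __name__ == "__main__":
    # An echo arriving about 667 ns after emission comes from roughly 100 m away.
    print(f"vacuum: {tof_distance(667e-9):.3f} m")
    # Assumed typical value for air; the correction is about 3 cm per 100 m.
    print(f"air:    {tof_distance(667e-9, 1.0003):.3f} m")
```

At 100 m the air correction is only a few centimeters, but that is already significant for sensors specified to millimeter-level accuracy.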

One of the primary determinants of accuracy is the quality and stability of the laser source itself. The wavelength, beam divergence, and power consistency of the emitted pulse directly affect the sensor's ability to produce a clear, detectable return signal. Sensors using highly stable, narrow-beam lasers typically achieve better accuracy, because a small spot is less likely to straddle several surfaces at different distances and return a blended echo.

Environmental conditions pose another significant challenge. Fog, rain, and dust attenuate and scatter the laser beam, weakening the return signal and sometimes producing false early echoes, while extreme temperatures affect both the sensor's electronics and the way light propagates through air. All of these can introduce errors into the time-of-flight measurement. Advanced sensors often incorporate environmental compensation algorithms to mitigate these effects.
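Two of these effects can be quantified with simple textbook models: beam divergence sets how large the spot is at the target, and atmospheric attenuation (the Beer-Lambert law) sets how much power survives the two-way trip. The sketch below assumes a full-angle divergence, a single extinction coefficient that lumps absorption and scattering together, and illustrative numbers for the aperture, divergence, and fog; none of these values come from a specific product.

```python
import math


def beam_footprint_m(range_m: float, exit_diameter_m: float, divergence_rad: float) -> float:
    """Approximate spot diameter at the target (small-angle approximation).

    divergence_rad is assumed to be the full-angle divergence, so the spot
    grows linearly with range on top of the exit aperture diameter.
    """
    return exit_diameter_m + range_m * divergence_rad


def two_way_transmission(range_m: float, extinction_per_m: float) -> float:
    """Fraction of optical power surviving the trip out and back (Beer-Lambert law).

    extinction_per_m lumps absorption and scattering by fog, rain, or dust into
    one coefficient; real atmospheres vary along the path.
    """
    return math.exp(-2.0 * extinction_per_m * range_m)


if __name__ == "__main__":
    # Hypothetical sensor: 5 mm exit aperture, 0.5 mrad full-angle divergence.
    for r in (10.0, 100.0, 500.0):
        print(f"{r:5.0f} m: spot about {beam_footprint_m(r, 0.005, 0.5e-3) * 100:.1f} cm")
    # Illustrative extinction coefficients (assumed): clear air vs. dense fog.
    for label, alpha in (("clear", 1e-4), ("fog", 0.02)):
        frac = two_way_transmission(200.0, alpha)
        print(f"{label}: {frac:.2e} of the power survives 200 m out and back")
```

Because the loss is exponential in range, fog that barely matters at short distances can erase the return entirely at longer ones.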

The reflectivity and characteristics of the target surface are equally critical. A dark, matte, or absorbent surface reflects less light back to the sensor than a bright, reflective one, which can weaken the return signal until it becomes difficult to detect accurately. The result can be longer measurement times or failed readings. The angle of incidence also plays a role: measurements taken at a grazing angle to the target surface are generally less accurate than those taken perpendicularly, because the spot stretches across a range of distances and less light is scattered back towards the sensor.
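A simplified model shows why dark targets and oblique angles hurt. Assuming a diffuse (Lambertian) target large enough to intercept the whole beam, received power scales with the surface reflectivity, with the cosine of the incidence angle, and with the inverse square of range. The hypothetical function below drops all constant factors (transmit power, receiver aperture, optics efficiency), so only ratios between its outputs are meaningful; the reflectivity values in the example are illustrative assumptions.

```python
import math


def relative_return_power(reflectivity: float, incidence_deg: float, range_m: float) -> float:
    """Relative received power from a diffuse (Lambertian) target.

    Simplified model, assuming the beam falls entirely on the target:
    power ~ reflectivity * cos(incidence) / range^2, where 0 deg means the
    beam hits the surface perpendicularly. Constant factors are omitted.
    """
    if not 0.0 <= reflectivity <= 1.0:
        raise ValueError("reflectivity must be between 0 and 1")
    if not 0.0 <= incidence_deg < 90.0:
        raise ValueError("incidence angle must be in [0, 90) degrees")
    if range_m <= 0:
        raise ValueError("range must be positive")
    return reflectivity * math.cos(math.radians(incidence_deg)) / range_m**2


if __name__ == "__main__":
    bright = relative_return_power(0.90, 0.0, 50.0)    # white surface, perpendicular
    dark = relative_return_power(0.05, 0.0, 50.0)      # black matte surface, perpendicular
    grazing = relative_return_power(0.90, 75.0, 50.0)  # white surface, 75 deg incidence
    print(f"dark / bright:  {dark / bright:.3f}")
    print(f"75 deg / 0 deg: {grazing / bright:.3f}")
```

Under these assumptions a black matte surface returns roughly one eighteenth of the signal of a white one, which is why a dark target at long range can drop below the detector's threshold.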

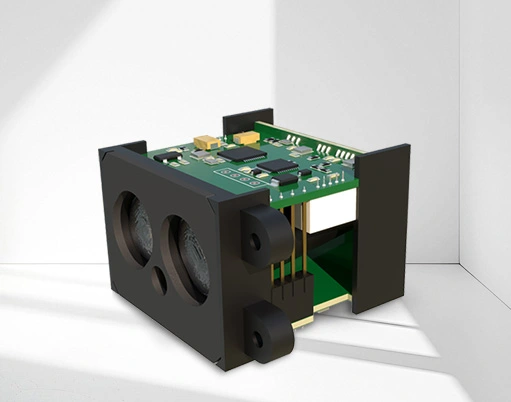

Electronic and processing capabilities form the backbone of measurement precision. The internal clock that times the pulse's journey must have exceptionally fine resolution: light travels about 30 centimeters per nanosecond, and because the measured path is a round trip, a timing error of just one nanosecond corresponds to a distance error of approximately 15 centimeters. Modern rangefinders use sophisticated timing circuits and signal processing algorithms to filter out noise and pick out the true return pulse from background interference.
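The timing arithmetic, and one common way to pick out the true return among noise, can be sketched as follows. The peak search is a plain cross-correlation (matched filter) against an assumed pulse shape, which is one standard technique among several; the function names, pulse shape, 1 GS/s sampling rate, and example signal are all hypothetical, and the emitted pulse is assumed to start at sample 0.

```python
# Speed of light in vacuum in metres per second.
SPEED_OF_LIGHT = 299_792_458.0


def range_from_round_trip(t_s: float) -> float:
    """One-way distance for a round-trip time; also converts a timing error into a range error."""
    return SPEED_OF_LIGHT * t_s / 2.0


def locate_return(samples: list[float], template: list[float]) -> int:
    """Index where the assumed pulse shape best matches the digitised signal.

    Plain cross-correlation (matched filter): slide the template along the
    signal and keep the offset with the largest sum of products. Because the
    score sums over the whole pulse, a lone noise spike tends to score lower
    than a genuine echo of the expected shape.
    """
    n, m = len(samples), len(template)
    if m == 0 or m > n:
        raise ValueError("template must be non-empty and no longer than the signal")
    best_index, best_score = 0, float("-inf")
    for offset in range(n - m + 1):
        score = sum(samples[offset + k] * template[k] for k in range(m))
        if score > best_score:
            best_index, best_score = offset, score
    return best_index


if __name__ == "__main__":
    print(f"1 ns timing error -> {range_from_round_trip(1e-9) * 100:.1f} cm of range error")

    pulse = [0.2, 0.6, 1.0, 0.6, 0.2]  # assumed pulse shape
    signal = [0.0] * 40
    signal[7] = 1.0  # single-sample noise spike, taller than the echo's peak
    for k, v in enumerate(pulse):
        signal[25 + k] += 0.8 * v  # attenuated echo starting at sample 25

    idx = locate_return(signal, pulse)
    sample_period_s = 1e-9  # assumed 1 GS/s digitiser
    print(f"echo at sample {idx}: about {range_from_round_trip(idx * sample_period_s):.2f} m")
```

In the example the noise spike is taller than the attenuated echo, so picking the largest raw sample would lock onto the spike, while the correlation finds the echo. Practical systems also interpolate between samples, since at 1 GS/s each sample spans about 15 cm of range.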

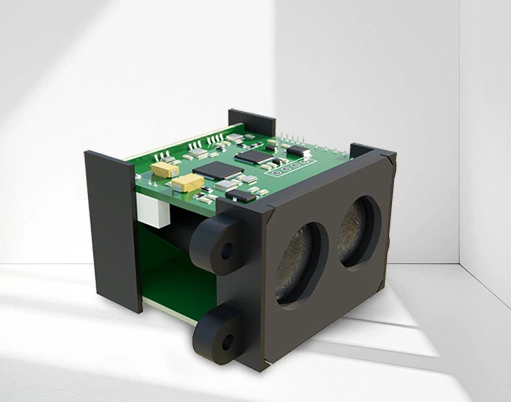

To improve laser rangefinder sensor accuracy, several strategies are employed. The first step is selecting a sensor whose specifications match the application's needs. High-precision tasks such as industrial alignment or archaeological mapping call for a sensor with millimeter-level accuracy and high sampling rates, whereas longer-range outdoor work, such as forestry, is better served by a sensor with a high-power pulsed laser and robust environmental sealing.

Regular calibration against known distances is fundamental to maintaining accuracy over time, because it reveals drift such as a constant offset or a scale error that grows with distance. User technique also has a significant impact on results. Holding the device steady, using a tripod for long-range measurements, and aiming at the most reflective part of an object all improve reliability. For challenging surfaces, attaching a reflective target plate provides a strong, consistent return signal.
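Calibration against known distances often amounts to fitting a correction of the form `reference = offset + scale * measured`. A least-squares sketch, assuming a set of surveyed reference distances and the rangefinder's raw readings at each one, could look like this; the function names and the readings in the example are hypothetical.

```python
def fit_calibration(measured_m: list[float], reference_m: list[float]) -> tuple[float, float]:
    """Least-squares fit of reference = offset + scale * measured.

    Returns (offset_m, scale). Needs at least two pairs with distinct readings.
    """
    n = len(measured_m)
    if n != len(reference_m) or n < 2:
        raise ValueError("need at least two paired measurements")
    mean_x = sum(measured_m) / n
    mean_y = sum(reference_m) / n
    sxx = sum((x - mean_x) ** 2 for x in measured_m)
    if sxx == 0:
        raise ValueError("measured distances must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(measured_m, reference_m))
    scale = sxy / sxx
    offset = mean_y - scale * mean_x
    return offset, scale


def apply_calibration(raw_m: float, offset_m: float, scale: float) -> float:
    """Correct a raw reading with a previously fitted offset and scale."""
    return offset_m + scale * raw_m


if __name__ == "__main__":
    # Hypothetical baseline: surveyed reference marks and the sensor's raw readings.
    reference = [5.000, 10.000, 20.000, 50.000]
    measured = [4.991, 9.990, 19.986, 49.973]
    offset, scale = fit_calibration(measured, reference)
    print(f"offset = {offset * 1000:+.1f} mm, scale = {scale:.6f}")
    print(f"corrected 30.000 m reading: {apply_calibration(30.000, offset, scale):.3f} m")
```

Repeating the fit periodically and comparing the coefficients over time is a simple way to detect when a sensor has drifted out of specification.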

Integration with other systems, such as inertial measurement units (IMUs) or global navigation satellite systems (GNSS), can further refine accuracy, especially on moving platforms, by correcting for the sensor's own movement and orientation during each measurement. Finally, software that applies advanced filtering, averages multiple measurements, and runs error-correction algorithms can extract more precision from the same hardware. Averaging reduces random noise but cannot remove a systematic bias, which is why it complements rather than replaces calibration.
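Two of these software techniques can be sketched together: averaging repeated readings after discarding outliers, and projecting a range into world coordinates from a position (for example, from GNSS) and an orientation (for example, from an IMU). Both functions are hypothetical illustrations. `robust_average` uses a simple median-distance rejection rule chosen for clarity, and `georeference` assumes the laser fires along the sensor's forward axis and ignores the lever-arm and boresight offsets that a real integration must calibrate.

```python
import math
import statistics


def robust_average(readings: list[float], max_dev_m: float) -> float:
    """Average repeated readings after discarding outliers.

    Readings farther than max_dev_m from the median (e.g. a stray return from
    rain or a passing object) are dropped and the rest are averaged. Averaging
    N independent readings shrinks random noise by about sqrt(N) but leaves
    any systematic bias untouched.
    """
    if not readings:
        raise ValueError("no readings")
    med = statistics.median(readings)
    kept = [r for r in readings if abs(r - med) <= max_dev_m]
    if not kept:  # possible with an even count of widely spread readings
        return med
    return statistics.fmean(kept)


def georeference(
    sensor_xyz: tuple[float, float, float], yaw_rad: float, pitch_rad: float, range_m: float
) -> tuple[float, float, float]:
    """Project a range measurement into world coordinates.

    Assumes yaw is counter-clockwise from the world x-axis, pitch is upward
    from horizontal, and position and orientation are sampled at the instant
    of the measurement. Lever-arm and boresight offsets are ignored.
    """
    x, y, z = sensor_xyz
    horizontal = range_m * math.cos(pitch_rad)
    return (
        x + horizontal * math.cos(yaw_rad),
        y + horizontal * math.sin(yaw_rad),
        z + range_m * math.sin(pitch_rad),
    )


if __name__ == "__main__":
    # Hypothetical repeated readings; the last one is a stray return.
    readings = [10.00, 10.02, 9.98, 10.01, 12.50]
    print(f"robust mean: {robust_average(readings, max_dev_m=0.1):.4f} m")
    # Hypothetical pose: sensor 1.5 m up, yaw 30 deg from +x, tilted 5 deg down.
    point = georeference((0.0, 0.0, 1.5), math.radians(30), math.radians(-5), 25.0)
    print("target at x={:.2f}, y={:.2f}, z={:.2f}".format(*point))
```

On a moving platform, even a small orientation error displaces the computed target point in proportion to range (about 4 cm per milliradian at 40 m), which is why accurate, time-synchronized pose data matters as much as the range itself.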

In conclusion, laser rangefinder sensor accuracy is a multifaceted attribute governed by hardware quality, environmental interaction, target properties, and signal processing sophistication. By comprehending these influencing factors and implementing best practices in selection, calibration, and operation, users can consistently achieve the reliable, precise measurements required for their critical applications. Continuous advancements in laser technology and digital processing promise even greater levels of accuracy and robustness in the future.